3D Endoscope to Boost Safety, Cut Costs of Surgery

NASA Technology

“A lot of things are not easy to solve when you’re trying to break through a new technology right from the get-go,” says Harish Manohara, supervisor of the Nano and Micro Systems Group and principal member of the technical staff at NASA’s Jet Propulsion Laboratory (JPL).

Trust a supergroup of rocket scientists and a brain surgeon to make it happen, though.

In 2007, Dr. Hrayr Shahinian was looking for an engineering team to help him develop an endoscopic device that was suitable for brain surgery, could steer its lens, and could produce three-dimensional video. At a social function, he discovered that the person seated next to him was Charles Elachi, director of JPL. Their conversation was the spark that eventually put Shahinian in touch with the JPL team.

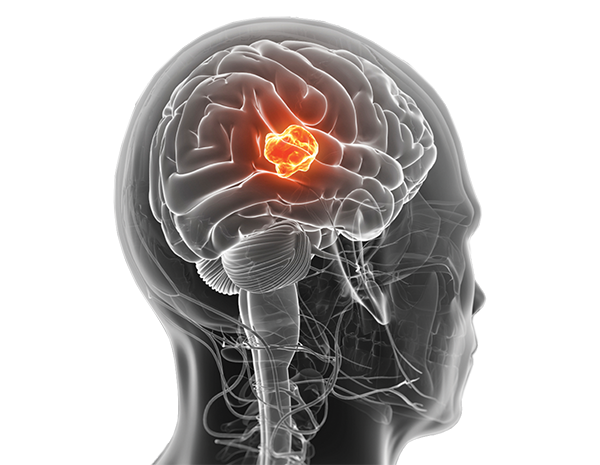

Director of the Skull Base Institute in Los Angeles, Shahinian helped to pioneer minimally invasive, endoscopic brain surgery in the mid-1990s. As the industry shifted away from open-skull operations to endoscopic techniques, in which a tiny camera and tools are inserted through a small hole, the risk of complications for most surgeries plummeted, as did the length of hospital stays and rehabilitation time. The change was not, however, without its drawbacks.

“It became obvious to me, even 15 years ago when I was converting to endoscopic, that we were losing depth perception,” Shahinian says. He notes that however high-definition an endoscope’s image may be, it is still flat, which makes it difficult for the surgeon to judge, for example, how close a tumor is to critical nerves or tissue behind it. “I realized that 3D endoscopy is the future.”

The problem, though, was that this kind of brain surgery is carried out in exceedingly close quarters, so the device could be no more than four millimeters in diameter. That ultimately ruled out using two lenses to create a 3D image, although Manohara says his team explored the idea.

NASA, too, often needs high-quality images, he says. “Whenever there are optics and 3D imaging involved, it can be adapted for planetary exploration.” For example, Manohara says, such a camera on a rover could peer into the hole left by a rock core extraction. “There is lots of interest in terms of assessing geological features, and 3D is often better than 2D.”

On a space shuttle or space station, he adds, the instrument could be used to look for a fault or fracture in machinery that is hidden from view. In that case, a 3D image would make it easier to assess the nature of the damage.

Seeing this common interest, NASA entered into a Space Act Agreement with Shahinian, and he obtained a license for the technology. “He had a very specific need and knew exactly what he wanted,” Manohara says. “The problem was well-defined, but the solution was not there.”

After the dual-lens model was scrapped due to poor image quality at such a small lens size, the team hit on another idea: two apertures with complementary color filters, incorporated into a single lens.

Just as humans and other animals process two images from the slightly different viewpoints of their eyes to perceive depth, 3D imaging requires two viewpoints.

“If it’s a single-lens system, we still have to somehow create two viewpoints of the same image,” Manohara explains. “It was a question of brainstorming.”

To create a fully lit image that can be viewed with standard 3D glasses, the team relied on color filters. Each aperture’s filter passes specific wavelengths within each of the red, green, and blue ranges that the other aperture’s filter blocks; together, these six wavelength bands make up white light. The light source, a xenon cold lamp, cycles rapidly through the six bands in sequence, so each aperture passes only the half of the reflected light that falls within its own three bands.
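For readers curious how one stream of exposures can become two views, the Python sketch below illustrates the bookkeeping under stated assumptions; it is not MARVEL’s actual software. It assumes a monochrome sensor that records one exposure per illumination band, and it uses hypothetical band labels (R1, G1, B1 for one aperture; R2, G2, B2 for the other), since the real filter wavelengths are not given here.

```python
from typing import Dict, List, Tuple

Frame = List[List[float]]                    # one monochrome exposure (rows x cols)
RGBImage = List[List[Tuple[float, float, float]]]

# Hypothetical labels for the six bands. Each aperture passes one red, one green,
# and one blue band that the other aperture blocks (assumption for illustration).
APERTURE_BANDS: Dict[str, Dict[str, str]] = {
    "left": {"red": "R1", "green": "G1", "blue": "B1"},
    "right": {"red": "R2", "green": "G2", "blue": "B2"},
}

# Assumed order in which the light source cycles through the six bands.
ILLUMINATION_SEQUENCE = ["R1", "R2", "G1", "G2", "B1", "B2"]


def assemble_view(frames: Dict[str, Frame], aperture: str) -> RGBImage:
    """Combine the three exposures taken through one aperture into a full-color image."""
    bands = APERTURE_BANDS[aperture]
    red, green, blue = frames[bands["red"]], frames[bands["green"]], frames[bands["blue"]]
    return [
        [(red[y][x], green[y][x], blue[y][x]) for x in range(len(red[0]))]
        for y in range(len(red))
    ]


def assemble_stereo_pair(frames: Dict[str, Frame]) -> Tuple[RGBImage, RGBImage]:
    """Split one full illumination cycle into left and right full-color views."""
    missing = [band for band in ILLUMINATION_SEQUENCE if band not in frames]
    if missing:
        raise ValueError(f"incomplete illumination cycle, missing bands: {missing}")
    return assemble_view(frames, "left"), assemble_view(frames, "right")


if __name__ == "__main__":
    # One-pixel exposures whose values record their position in the cycle.
    frames = {band: [[float(i)]] for i, band in enumerate(ILLUMINATION_SEQUENCE)}
    left, right = assemble_stereo_pair(frames)
    print(left)   # [[(0.0, 2.0, 4.0)]]
    print(right)  # [[(1.0, 3.0, 5.0)]]
```

Keying each exposure by its band, rather than by its position in the capture order, keeps the left/right split correct even if the assumed illumination sequence is rearranged.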

“You’re creating two separate, fully colored images,” Manohara says. These are then run through the standard software used to create and display images suitable for viewing with the same polarized 3D glasses used in movie theaters.

The other challenge was Shahinian’s specification that the tip of the camera be able to steer from side to side. To date, the endoscopes available to brain surgeons are all either straight-looking or fixed-angle.

Manohara says the tight restriction on diameter made it difficult to install an automated joint in the device. “You have to have illumination in there, and you have to have data and power cables in there,” he says. “You have a lot of wires inside, and the room available is very small.”

However, the team succeeded in enabling the camera, which is controlled with a joystick, to turn 60 degrees in each direction. Manohara credits Sam Bae, also of the Nano and Micro Systems Group at JPL, with much of the system design and integration, and he says optical engineer Ronald Korniski made the lens work.

Technology Transfer

In December 2013, Shahinian had the first prototype of the Multi-Angle Rear-Viewing Endoscopic Tool (MARVEL) in his hand. Two more stereocamera prototypes have since been built, and the technology is being prepared for submission to the Food and Drug Administration for medical-use approval. Shahinian says he expects to have the device in use by early 2015.

He says he wants to be the first surgeon to use the MARVEL in his practice but expects that this advancement will soon find widespread application. “This technology with MARVEL will apply to all types of endoscopy,” he says. “Brain surgery is a very small niche.”

Benefits

Shahinian says the improved visibility while performing minimally invasive surgery will improve safety for many types of operations, speeding patient recovery and, ultimately, reducing medical costs.

“It will help to prevent things like damaging structures behind the tumor that are hidden from you,” he says. “The better you can see something, the safer the procedure will be, and the fewer complications you have.” Reduced complications and quicker recovery lead to lower costs, he adds. “The largest cost in our health care system is really hospitalization.”

Shahinian, a naturalized citizen, says that to the extent the device advances NASA and other government technology, “then I feel grateful that I’ve given back something to this country after what it has done for me.”

Shahinian emphasizes that it was a NASA partnership that made the new device a reality.

“Yes, I did have considerable input, but I could not have done it without the NASA team, obviously,” Shahinian says. “As exciting as it is to explore other planets, I personally believe NASA has a great role to play right here on our planet.”

The Multi-Angle Rear-Viewing Endoscopic Tool (MARVEL) is the first endoscope suitable for brain surgery that is capable of producing three-dimensional imagery.

The tool was produced through a joint effort between NASA's Jet Propulsion Laboratory and the Skull Base Institute in Los Angeles.