Conversing With Computers

Originating Technology/NASA Contribution

I/NET, Inc., is making the dream of natural human-computer conversation a practical reality. Through a combination of advanced artificial intelligence research and practical software design, I/NET has taken the complexity out of developing advanced natural language interfaces. Conversational capabilities like pronoun resolution, anaphora and ellipsis processing, and dialog management that were once available only in the laboratory can now be brought to any application with any speech recognition system using I/NET’s conversational engine middleware.

The conversational interface technology allows people to control computers and other electronic devices by speaking everyday natural language. Unlike voice recognition systems that map spoken sounds to isolated commands, a conversational interface system enables extended conversations with a shared, changing context. For example, while driving, a person might ask the car, “What song is playing?” When the car responds with the name of the song on the CD player, the driver might say, “Turn it up.” The car needs to infer that “it” means the volume of the CD player even though the CD player has not been explicitly mentioned. If the driver then says, “That’s too much,” the car needs to realize that its volume adjustment was too large and that the volume should be turned down a bit.
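The context tracking described above can be illustrated with a minimal Python sketch. It is not I/NET’s DPMA engine: the `Device` and `DialogContext` classes, the fixed phrase matching, the 0 to 10 volume scale, and the rule of backing off about half of the last step are all hypothetical choices made for illustration. The point is that “it” is resolved against whatever device the conversation last brought into focus, and “That’s too much” is interpreted relative to the previous action rather than as a standalone command.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Device:
    name: str
    volume: int = 5  # hypothetical 0-10 volume scale


@dataclass
class DialogContext:
    """Shared, changing context carried from one utterance to the next."""
    focus: Optional[Device] = None  # device the conversation is currently about
    last_step: int = 0              # size of the most recent volume change


def handle(utterance: str, ctx: DialogContext, devices: Dict[str, Device]) -> str:
    # Normalize case, curly apostrophes, and trailing punctuation.
    text = utterance.lower().replace("\u2019", "'").strip(" ?!.")

    if text == "what song is playing":
        # Answering about the song implicitly puts the CD player in focus.
        ctx.focus = devices["cd player"]
        return "Now playing track 3 on the CD player."

    if text == "turn it up":
        # "it" resolves to the device in focus, not to anything named here.
        if ctx.focus is None:
            return "Turn what up?"
        new_volume = min(10, ctx.focus.volume + 3)
        ctx.last_step = new_volume - ctx.focus.volume
        ctx.focus.volume = new_volume
        return f"{ctx.focus.name} volume is now {ctx.focus.volume}."

    if text == "that's too much":
        # A correction of the previous action: undo part of the last increase.
        if ctx.focus is None or ctx.last_step <= 0:
            return "Too much of what?"
        back_off = max(1, ctx.last_step // 2)
        ctx.focus.volume -= back_off
        ctx.last_step = -back_off
        return f"{ctx.focus.name} volume is now {ctx.focus.volume}."

    return "Sorry, I didn't understand that."
```

Keeping the focus and the last adjustment in one context object is what lets a later utterance refer back to an earlier one; a system that treats each command in isolation would have no way to answer “Turn it up.”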

Partnership

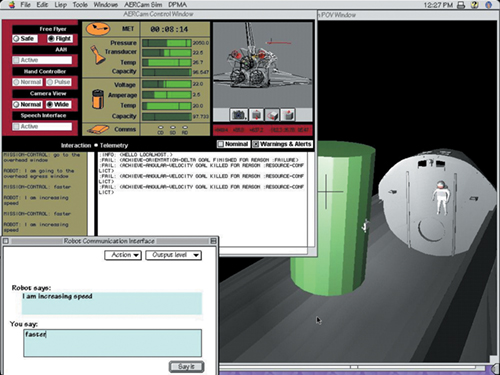

In 1995, Dr. R. James Firby’s work on robot control and natural language at the University of Chicago attracted the attention of Jon Ericson, a NASA engineer working on the Extravehicular Activity (EVA) Retriever project at Johnson Space Center. The next year, Johnson granted Neodesic Corporation, the company Firby cofounded, a Small Business Innovation Research (SBIR) contract to build a natural language system for robotic assistants in space.

Neodesic developed the Dynamic Predictive Memory Architecture (DPMA) system, which was used experimentally in conjunction with NASA’s Advanced Life Support System Water Recycling project after the EVA Retriever project was cancelled. In 2001, Neodesic sold the language technology to I/NET, Inc., of Kalamazoo, Michigan. Firby and several of his Neodesic colleagues joined I/NET, and NASA Johnson granted the company an SBIR contract to further develop the conversational interface technology. Under this contract, I/NET worked to make the DPMA system easier to use and to enable it to run on small systems such as handheld personal digital assistants.

To make the DPMA technology more readily accessible to software developers, I/NET is developing a suite of Conversational Interface Domains (CIDs). Programmers need a system that hides difficult language issues like inferring pronoun references, managing conversational context, and creating dynamic dialogues. Each CID is a prebuilt library that handles these complex processes automatically in a specific domain. Programmers describe their systems’ functions and terminology with simple, declarative forms, and the CID library manages context, inference, and dialog creation to allow users to carry on natural language conversations with the system. Two CIDs with wide applicability are the Device Control CID and the Messaging CID. The Device Control CID is well suited to building conversational interfaces for systems made up of devices and properties. Examples include automobile controls, stereo systems, life-support systems, machine tools, and robotic systems. The Messaging CID is tailored to systems that manage collections of messages such as alarms, e-mail, or checklists.

For example, if a heating, ventilation, and air conditioning (HVAC) automation supplier wants to add a conversational interface to an environmental control room, the Device Control CID can be readily adapted to control environmental components. The developer needs to supply the specific details about the HVAC machines, but the language for turning them on and off and adjusting parameters is already encoded in the library.
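The split between developer-supplied details and library-supplied language can be sketched in a few lines of Python. This is an illustration only, not the Device Control CID’s actual format: the `DeviceSpec` and `Property` classes, the example air handler and chiller entries, and the handful of regular-expression phrasings are all hypothetical. The developer writes only the `HVAC` list; the `command` function stands in for the generic on/off and set-parameter language that the library already provides.

```python
import re
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class Property:
    low: float
    high: float
    value: float


@dataclass
class DeviceSpec:
    name: str
    synonyms: Tuple[str, ...] = ()
    properties: Dict[str, Property] = field(default_factory=dict)
    on: bool = False


# Developer-supplied, declarative part: hypothetical HVAC equipment.
HVAC: List[DeviceSpec] = [
    DeviceSpec("air handler", ("fan", "blower"), {"speed": Property(0, 10, 3)}),
    DeviceSpec("chiller", ("cooler",), {"setpoint": Property(40, 55, 44)}),
]


def find(devices: List[DeviceSpec], name: str) -> Optional[DeviceSpec]:
    """Look a device up by its name or any declared synonym."""
    for dev in devices:
        if name == dev.name or name in dev.synonyms:
            return dev
    return None


def command(text: str, devices: List[DeviceSpec]) -> str:
    """Generic, library-style language: works for any list of DeviceSpecs."""
    text = text.lower().strip(" .!")

    # "turn on the X" / "turn the X on" (and the same for "off")
    m = re.fullmatch(r"turn (on|off) the (.+)", text)
    if m:
        state, name = m.groups()
    else:
        m = re.fullmatch(r"turn the (.+) (on|off)", text)
        if m:
            name, state = m.groups()
    if m:
        dev = find(devices, name)
        if dev is None:
            return f"I don't know a device called {name}."
        dev.on = state == "on"
        return f"The {dev.name} is {state}."

    # "set the X <property> to N", checked against the declared range
    m = re.fullmatch(r"set the (.+?) (\w+) to (\d+(?:\.\d+)?)", text)
    if m:
        name, prop, raw = m.groups()
        dev = find(devices, name)
        if dev is None or prop not in dev.properties:
            return "I can't set that."
        p, value = dev.properties[prop], float(raw)
        if not p.low <= value <= p.high:
            return f"The {dev.name} {prop} must be between {p.low:g} and {p.high:g}."
        p.value = value
        return f"The {dev.name} {prop} is now {value:g}."

    return "Sorry, I didn't understand that."
```

Adding another piece of equipment would mean adding one more `DeviceSpec` entry; `command` itself would not change, which is the division of labor the paragraph describes.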

Similarly, the Device Control CID can be used in automotive environments, where its wide language scope enables drivers and passengers to use varied forms of the same request, such as “Turn down the volume,” “Turn the volume down,” “Turn down the sound,” and even “It’s too loud!” The library also manages context and extended dialogues to help the system understand driver requests. For example, if the driver asks the car to “Turn on the seat warmer,” the CID library does a number of things. First, it checks to see which seat warmer the driver might mean. This check can take into account external context such as which seat warmers have people sitting on them and which seat warmers are already on, as well as conversational context such as whether the driver was just talking about a specific seat warmer. If the library cannot infer a specific seat warmer, then it will ask the driver which seat warmer to turn on. The driver might respond with a phrase like “The driver’s” or “Mine.” The library then has to infer that the driver means the driver’s side seat warmer.
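The seat-warmer exchange above can be approximated with a small Python sketch that combines external context (which seats are occupied and which warmers are already on), conversational context (the seat most recently discussed), and a clarifying question when neither settles the matter. The `Seat` and `SeatWarmerDialog` names, the seat labels, and the simple string handling of replies such as “Mine” and “The driver’s” are hypothetical; a real CID library would accept far more phrasings.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Seat:
    occupied: bool          # external context, e.g. from seat sensors (assumed)
    warmer_on: bool = False


class SeatWarmerDialog:
    def __init__(self, seats: Dict[str, Seat], speaker: str = "driver") -> None:
        self.seats = seats
        self.speaker = speaker                      # whose seat "mine" refers to
        self.last_mentioned: Optional[str] = None   # conversational context
        self.pending = False                        # waiting for a "which seat?" answer

    def candidates(self) -> List[str]:
        # Only occupied seats whose warmer is still off are plausible targets.
        return [name for name, s in self.seats.items() if s.occupied and not s.warmer_on]

    def turn_on(self) -> str:
        options = self.candidates()
        # Prefer the seat the conversation was just about, if it is plausible.
        if self.last_mentioned in options:
            return self._activate(self.last_mentioned)
        if len(options) == 1:
            return self._activate(options[0])
        if not options:
            return "Every occupied seat already has its warmer on."
        self.pending = True
        return "Which seat warmer: " + " or ".join(options) + "?"

    def answer(self, reply: str) -> str:
        # Normalize curly apostrophes, case, and trailing punctuation.
        text = reply.lower().replace("\u2019", "'").strip(" .!")
        if text in ("mine", "my seat"):
            seat = self.speaker
        else:
            seat = text[4:] if text.startswith("the ") else text
            seat = seat[:-2] if seat.endswith("'s") else seat
        if not self.pending or seat not in self.seats:
            return "Sorry, which seat did you mean?"
        self.pending = False
        return self._activate(seat)

    def _activate(self, seat: str) -> str:
        self.seats[seat].warmer_on = True
        self.last_mentioned = seat
        return f"Turning on the {seat}'s seat warmer."
```

Filtering by external context before asking keeps the clarifying question short: an unoccupied seat, or one whose warmer is already on, never appears as an option.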

Product Outcome

I/NET has commercialized its conversational technology and CID libraries for incorporation into a wide variety of systems. The Embedded Conversational Interface (CI) Toolkit and Converse Server serve as complementary development platforms for clients to incorporate the conversational interface technology into their own products.

The CI Toolkit is written in Java™ for embedded systems such as hand-held devices and cell phones. Initially designed for the automotive telematics market, it supports all I/NET CID libraries in a very small package. The CI Toolkit was recently licensed by a company building accessible systems for the handicapped.

I/NET’s Converse Server is designed for a wide variety of computers running a number of different operating systems, and it supports many more interface options. Converse Server enables natural language applications to be deployed through Web browsers, instant messenger, wireless Web, telephone, text messaging, and custom application interfaces.

I/NET has also built its own products that use the CID libraries. The Phone Automation Manager (PAM) is an application for interacting with remote, automated systems over the telephone. It has found wide application in factory and machine tool monitoring. When a factory system issues an alarm, PAM can call a maintenance technician on the phone and explain the problem. The technician can then work with PAM using plain English to ask for more details about the alarm, check on the system status, and reset machinery if necessary without needing to come to the factory first. By working through PAM, a technician can be on call without leaving home, increasing the individual worker’s flexibility and decreasing the company’s staffing needs. PAM can also be customized to support additional requests—even to provide warehouse, stock, and order information. Thus, PAM can be used as a management tool as well as a maintenance assistant. The system was recently tested at an auto parts factory in Tennessee during a crucial maintenance period and received rave reviews.
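The PAM workflow, an alarm that triggers an outbound call followed by a spoken question-and-answer session, can be sketched in Python as an alarm collection plus a small request dispatcher, loosely in the spirit of the Messaging CID described earlier. Everything here is hypothetical: `PhoneAutomationSketch`, the `call_technician` callback that stands in for real telephony, the machine names, and the keyword matching that stands in for genuine language understanding.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Alarm:
    machine: str
    message: str
    active: bool = True


@dataclass
class PhoneAutomationSketch:
    call_technician: Callable[[str], None]                   # stand-in for telephony
    machines: Dict[str, str] = field(default_factory=dict)   # machine -> status
    alarms: List[Alarm] = field(default_factory=list)        # message collection

    def raise_alarm(self, machine: str, message: str) -> Alarm:
        """Record a factory alarm and phone the on-call technician about it."""
        alarm = Alarm(machine, message)
        self.alarms.append(alarm)
        self.machines[machine] = "faulted"
        self.call_technician(f"Alarm on {machine}: {message}.")
        return alarm

    def current(self) -> Optional[Alarm]:
        """The most recent alarm that has not been reset."""
        active = [a for a in self.alarms if a.active]
        return active[-1] if active else None

    def ask(self, request: str) -> str:
        """Answer a technician's spoken request (keyword matching only)."""
        text = request.lower()
        if "status" in text:
            report = "; ".join(f"{m} is {s}" for m, s in self.machines.items())
            return report or "No machines are being monitored."
        alarm = self.current()
        if alarm is None:
            return "There are no active alarms."
        if "detail" in text or "what happened" in text:
            return f"{alarm.machine} reported: {alarm.message}."
        if "reset" in text:
            alarm.active = False
            self.machines[alarm.machine] = "running"
            return f"{alarm.machine} has been reset."
        return "You can ask for details, the status, or a reset."
```

Passing the telephony hook in as a callback keeps the dialog logic testable without a phone line, and “reset it” works because the current alarm supplies the referent for “it.”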

As advances in computing make embedded devices smaller and more powerful, they also make voice recognition more reliable. I/NET believes that conversational interfaces are perfect for controlling embedded devices because they require no display and, when well crafted, help the user discover and take full advantage of each device’s capabilities. I/NET’s CID libraries offer powerful, easy-to-implement building blocks for constructing modular, user-friendly, conversational interfaces. I/NET is putting advanced interface technology to work today.

Java™ is a trademark of Sun Microsystems, Inc.

I/NET, Inc.’s conversational interface technology emerged from an effort to build a natural language system for robotic assistants in space.