Hearing in True 3-D

Originating Technology/NASA Contribution

In 1984, researchers from Ames Research Center came together to develop advanced human interfaces for NASA’s teleoperations, work that would come to be known as “virtual reality.” The work was based on the theory that if the sensory interfaces met a certain threshold and sufficiently supported one another, the operator would feel present in the remote or synthetic environment rather than in his or her physical location.

Twenty years later, this prolific research continues to pay dividends to society in the form of cutting-edge virtual reality products, such as an interactive audio simulation system.

Partnership

Throughout the 1990s, virtual reality technology was applied to multiple areas, from video games to military equipment. William Chapin founded AuSIM, Inc., in 1998 to develop three-dimensional (3-D) audio products for mission-critical applications, such as those originally proposed by NASA.

Before launching his Mountain View, California-based company, Chapin worked with NASA partners and researchers on physically based acoustic room simulation models. Over a 4-year period, the group developed three successively more detailed and accurate models of acoustic simulation.

After founding AuSIM in 1998, Chapin strengthened his ties with NASA. Dr. Stephen Ellis, a member of Ames’ Human Information Processing Branch, was conducting research on perceptual issues related to latency in visual displays, along with Dr. Dov Adelstein and Dr. Elizabeth Wenzel, one of the NASA researchers who helped develop the original virtual reality interfaces at Ames. AuSIM assisted Ellis, Adelstein, and Wenzel by integrating aural and visual displays so that the three could study how latency in one display interrelates with latency in the other. Ames contracted with AuSIM to provide the synchronization control for the aural and visual display systems, and this work led to a series of annually renewed contracts between Ames and AuSIM.

Meanwhile, across the country at NASA’s Langley Research Center, Dr. Stephen Rizzi of the Structural Acoustics Branch needed an auralization architecture on which he could develop his own models. Rizzi and AuSIM collaborated to create a version of the company’s technology with a “plug-in” design, in which individual sub-models can be replaced. This “open kernel architecture” collaboration has continued through 2004, with support from Phase I and Phase II Small Business Innovation Research (SBIR) contracts. Rizzi and AuSIM have also produced joint research papers based on their studies of advanced propagation models and structural acoustics.
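The article does not describe AuSIM’s actual open kernel interface. As a rough illustration of what a replaceable sub-model design can look like, here is a minimal Python sketch, assuming that each sub-model sits behind a common interface and occupies a named slot in the kernel. All names (`PropagationModel`, `AuralizationKernel`, `plug_in`, the `"propagation"` slot) are hypothetical, and the absorption term is a deliberately simplified, frequency-independent stand-in for a real atmospheric model.

```python
from abc import ABC, abstractmethod


class PropagationModel(ABC):
    """Hypothetical interface that every propagation plug-in must implement."""

    @abstractmethod
    def gain(self, distance_m: float) -> float:
        """Return the amplitude gain for a source at the given distance."""


class SphericalSpreading(PropagationModel):
    """Default sub-model: 1/r spherical spreading, clamped to unity inside 1 m."""

    def gain(self, distance_m: float) -> float:
        return 1.0 / max(distance_m, 1.0)


class SpreadingWithAbsorption(PropagationModel):
    """Alternative plug-in: 1/r spreading plus a flat (frequency-independent)
    absorption loss in dB per metre. A simplification, not a standard model."""

    def __init__(self, alpha_db_per_m: float) -> None:
        self.alpha_db_per_m = alpha_db_per_m

    def gain(self, distance_m: float) -> float:
        spreading = 1.0 / max(distance_m, 1.0)
        absorption_db = self.alpha_db_per_m * distance_m
        return spreading * 10 ** (-absorption_db / 20)  # convert dB loss to amplitude


class AuralizationKernel:
    """Hypothetical kernel that keeps its sub-models in named, swappable slots."""

    def __init__(self) -> None:
        self._models: dict[str, PropagationModel] = {"propagation": SphericalSpreading()}

    def plug_in(self, slot: str, model: PropagationModel) -> None:
        # Only existing slots can be replaced; the kernel itself stays fixed.
        if slot not in self._models:
            raise KeyError(f"unknown slot: {slot}")
        self._models[slot] = model

    def render_gain(self, distance_m: float) -> float:
        # The kernel calls whatever model currently occupies the slot.
        return self._models["propagation"].gain(distance_m)
```

A researcher could then swap in a custom model without touching the kernel, for example `kernel.plug_in("propagation", SpreadingWithAbsorption(0.01))`. The design choice being illustrated is that the kernel depends only on the interface, so a lab can experiment with its own propagation physics while reusing everything else.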

Product Outcome

While audio simulation technology has been called “3-D sound,” the same label has also been applied to spatialized sound and surround sound, which are simpler technologies that attempt to leverage traditional sound production techniques. Spatialized sound and surround sound are well suited to creating a theater effect in one’s living room, but they do not help listeners distinguish, for example, multiple alarms in a nuclear power plant control room. According to the company, AuSIM’s interactive audio simulation, by contrast, can make such alarms distinguishable, give a fighter pilot a natural cue for an approaching threat, or help air traffic controllers associate pilot voices with the planes in the surrounding airspace and on the taxiways.

In noisy environments such as restaurants and lobbies, people are well adapted to tuning in to a desired sound and tuning out noise, a perceptual phenomenon known as the “cocktail party effect.” Humans perceive the signatures imposed on a sound as it propagates from its source to their ears, and from these they build a mental image of the environment that allows them to discriminate between independently originating sounds. AuSIM notes that traditional audio technologies do not simulate the propagation of sound through a medium and therefore present false aural signatures.

AuSIM’s solutions for helping humans differentiate between simultaneous sounds are based on NASA-influenced audio simulation techniques that create and preserve the perceptual spatial cues in electronically transmitted sound. The solutions apply to military, industrial, voice telecommunications, and academic research projects.

As the company’s core technology, AuSIM3D™ gathers the dynamic acoustic properties, 3-D position, and 3-D orientation of all objects to drive complex models based on the physics of sound waves. Applied to real-world tasks, AuSIM3D, according to the company, reduces fatigue by presenting information naturally, keeps workers more efficient and productive, increases the accuracy and quality of listeners’ work, leads to fewer critical and costly mistakes, and saves time, money, and even lives.
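AuSIM3D’s internal models are not described in this article. As a much simpler illustration of how position and orientation can drive physically based cues, the Python sketch below computes, for one source in the horizontal plane, the propagation delay through air, a 1/r spreading gain, the source’s azimuth relative to the listener’s head, and an interaural time difference (ITD) from the classic Woodworth spherical-head formula. The function name, the 2-D simplification, the 343 m/s speed of sound, and the 8.75 cm head radius are all assumptions chosen for illustration; rooms, reflections, and head-related filtering are ignored.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # dry air at roughly 20 degrees C (assumed)
HEAD_RADIUS_M = 0.0875      # assumed average head radius for the spherical-head model


def propagation_cues(source_xy, listener_xy, listener_yaw_rad):
    """Return (delay_s, gain, azimuth_rad, itd_s) for one source in the horizontal plane.

    Azimuth is measured from the listener's facing direction, positive to the
    left (counterclockwise), wrapped to [-pi, pi). A positive ITD means the
    sound reaches the left ear first.
    """
    dx = source_xy[0] - listener_xy[0]
    dy = source_xy[1] - listener_xy[1]
    distance = math.hypot(dx, dy)

    delay_s = distance / SPEED_OF_SOUND_M_S  # travel time through the medium
    gain = 1.0 / max(distance, 1.0)          # 1/r spreading, referenced to 1 m

    # Bearing of the source relative to where the head is pointing.
    azimuth = math.atan2(dy, dx) - listener_yaw_rad
    azimuth = (azimuth + math.pi) % (2 * math.pi) - math.pi

    # Woodworth ITD uses the lateral angle in [-pi/2, pi/2]; sources behind the
    # head fold onto the front, since this simple model has no front/back cue.
    lateral = math.asin(math.sin(azimuth))
    itd_s = (HEAD_RADIUS_M / SPEED_OF_SOUND_M_S) * (lateral + math.sin(lateral))
    return delay_s, gain, azimuth, itd_s
```

For a listener at the origin facing along +x and a source 10 m directly to the left, `propagation_cues((0.0, 10.0), (0.0, 0.0), 0.0)` yields a delay of about 29 ms, a gain of 0.1, an azimuth of pi/2, and an ITD of roughly 0.66 ms, near the largest ITD a human head produces. Turning the head changes only the azimuth and ITD, which is why tracking orientation as well as position matters for these cues.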

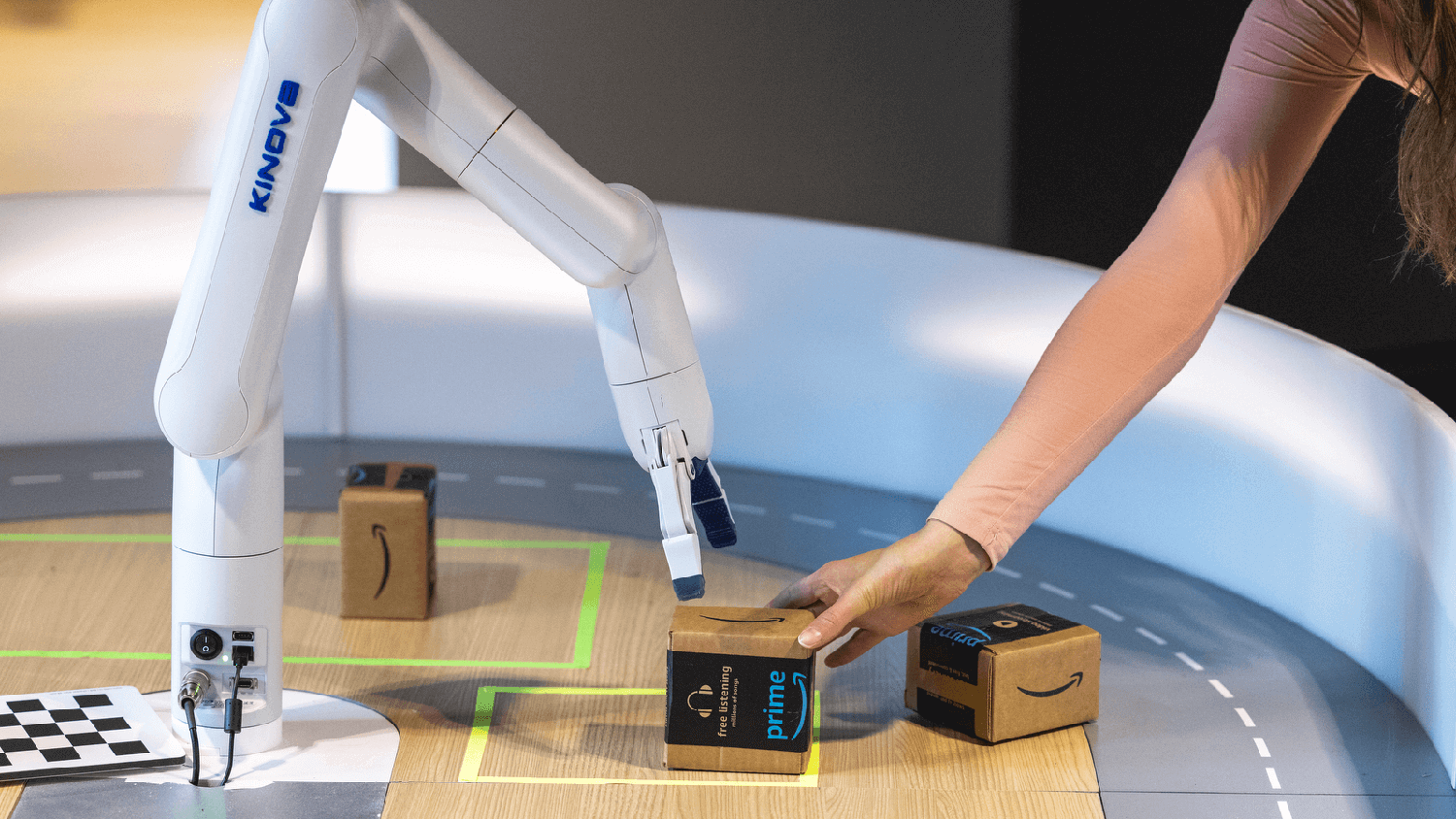

AuSIM3D and the company’s related products extend to all branches of military and security operations. The U.S. Navy initiated a next-generation destroyer project to significantly reduce the manning requirements for command and control, and AuSIM delivered over 30 systems to support this multi-modal watch station project. Additionally, the Navy is using AuSIM systems to re-examine the use of aural sonar displays. Sonar data are traditionally presented as visual displays of interpreted data, and such displays demand intensely focused attention because the data are collected from all directions. In a NASA/U.S. Army project, positional AuSIM audio displays have been added to flight simulators to improve human performance and effectiveness.

AuSIM has broadened its original “mission-critical” business plan to make room for human-interest applications. AuSIM’s products can be used in teleconferences, where spatially consistent voices sound more natural, and in driver’s education schools, where a student can learn to react to realistic sound events in a simulator. This puts fewer people at risk during training and produces drivers who are better prepared for real situations.

For future applications, AuSIM has teamed with the Girvan Institute of Technology, with the intention of developing and capitalizing on end-user products for additional key markets. The institute selects the most promising small companies commercializing NASA technology for incubation and capitalization.

AuSIM3D™ is a trademark of AuSIM, Inc.

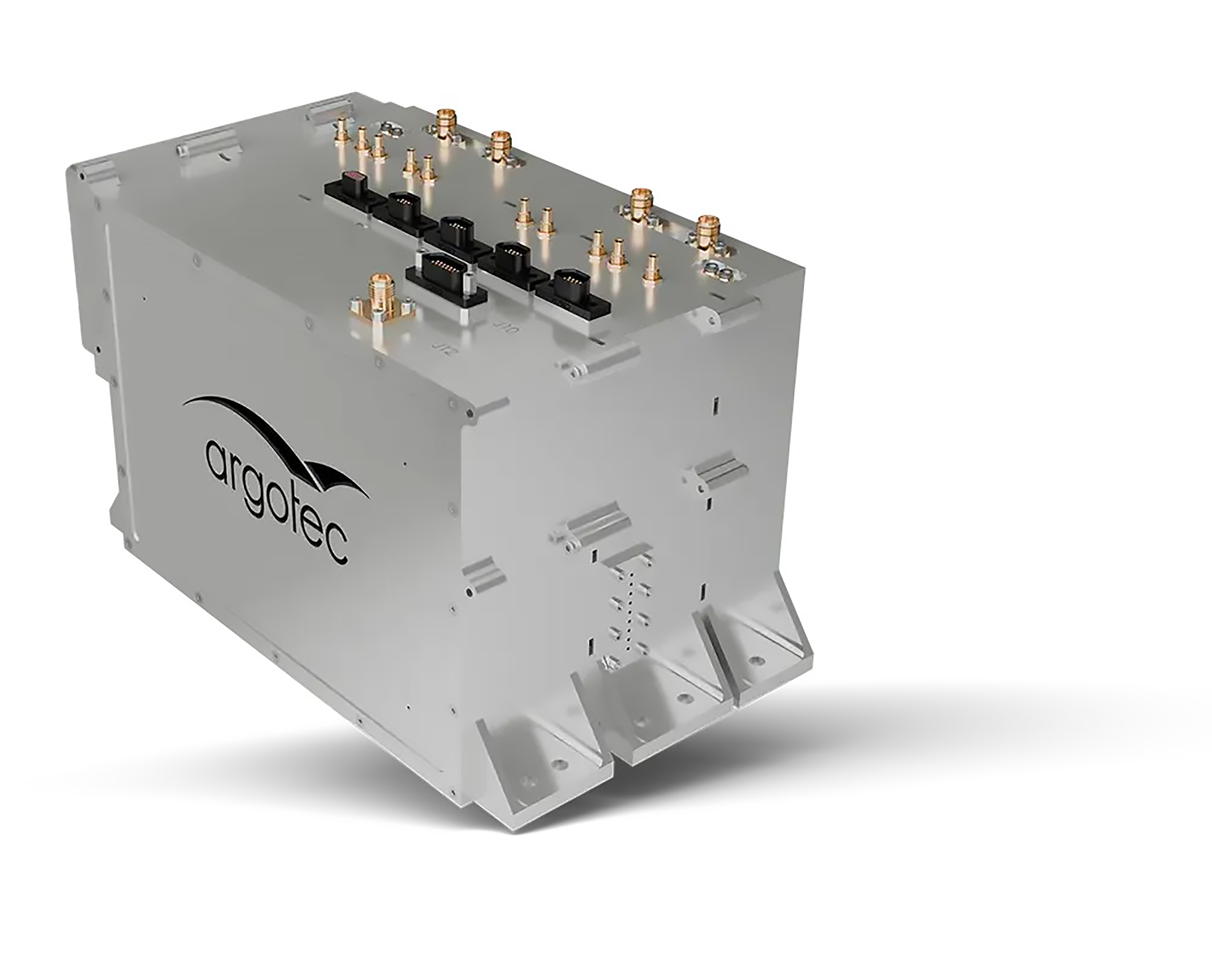

Test System for Studying Spatial Hearing through Obstructing Headgear: AuSIM, Inc.’s 32-channel, microphone-instrumented helmet provides soldiers with situational awareness of their environment while protecting them from potential ballistic, chemical, biological, optical, and percussive threats.

The 3-D Voice Communication Interface System, AuSIM, Inc.’s latest hardware product, connects to a network via Ethernet. Each participant uses one system, and each headset is tracked in both orientation and global position. Derivatives of this system are being developed for wearable and vehicle applications.