Flash Lidar Enables Driverless Navigation

NASA Technology

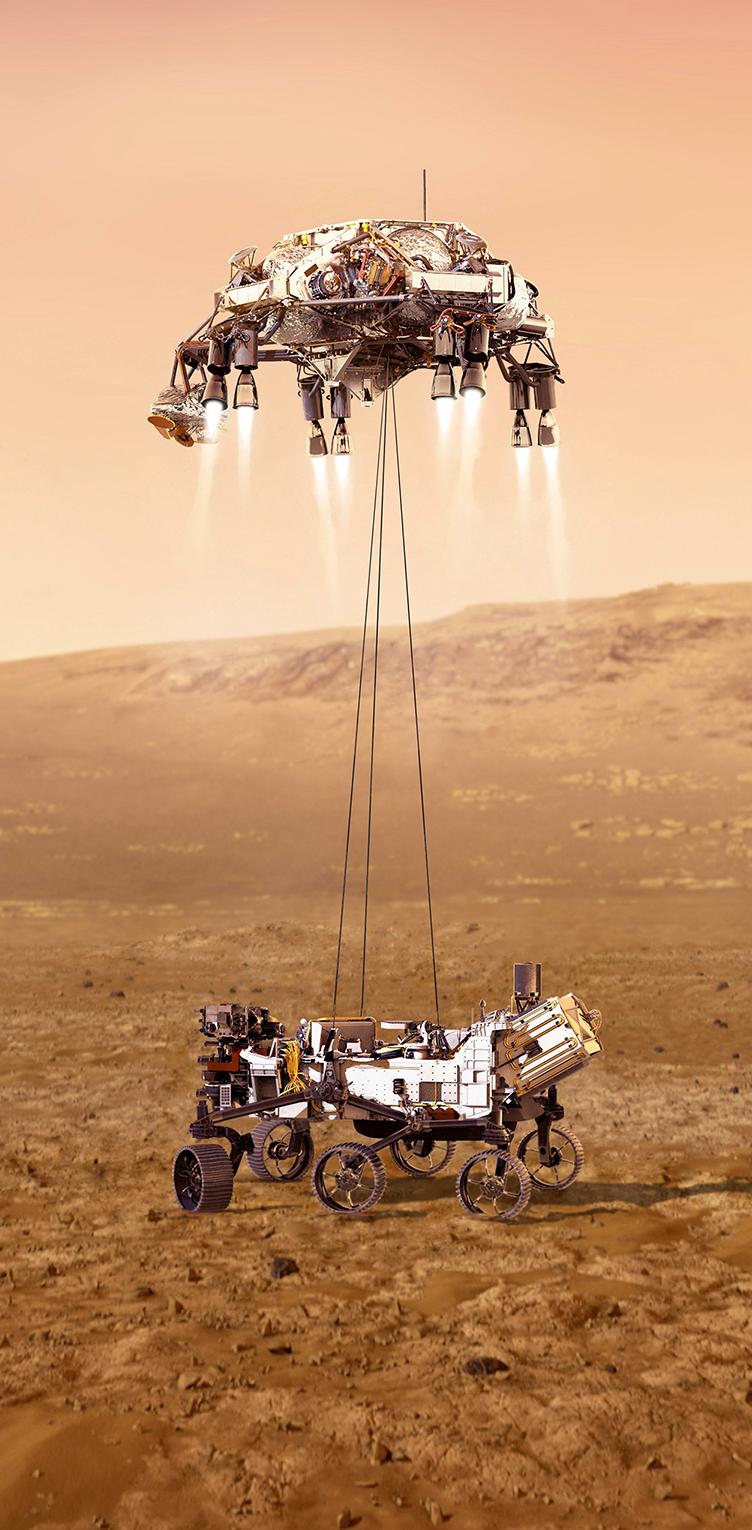

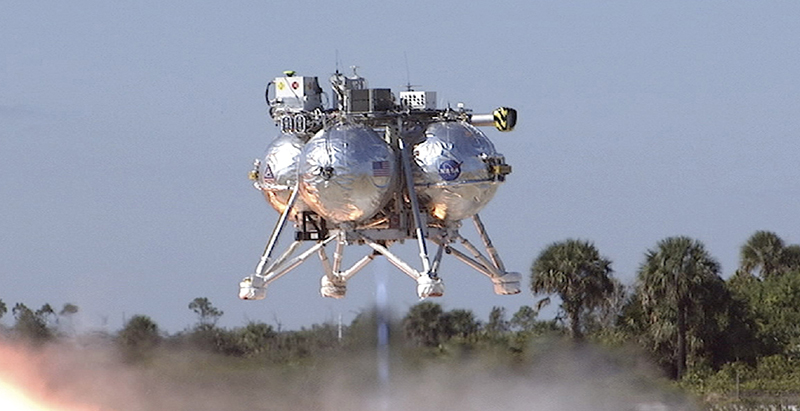

A spacecraft hovers over the gray, cratered moonscape, scanning for its landing spot, and then, in a blaze of rocket fire that kicks up a massive cloud of dust, the lander, Morpheus, lowers down, safely and steadily, to a clear spot amid the rocky, debris-covered surface.

In reality, this 2014 flight was not a new Moon landing—the carefully crafted moonscape was actually just off to the side of the runway at Kennedy Space Center. But it did demonstrate something very new: an autonomous landing enabled, in part, by a special kind of 3D imager, called a global shutter flash lidar, which is being employed to help NASA approach and sample an asteroid and could soon be helping your car safely navigate the roads.

Traditional lidar works by sending out laser pulses. As the laser mechanically scans across a scene, the device calculates how long it takes for pulses to bounce back from various surfaces. It is then able to stitch together a 3D topographical map of the scene, pixel by pixel.
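
The ranging step described above can be sketched in a few lines. This is a minimal illustration, not the software of any particular lidar: the function name `range_from_round_trip` and the sample return times are assumptions made for the example. It relies only on the fact that a pulse travels to the surface and back at the speed of light.

```python
# Speed of light in vacuum, metres per second (exact by SI definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def range_from_round_trip(round_trip_s: float) -> float:
    """Distance to a surface, given the time a pulse took to go out and return."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse covers the distance twice (out and back), so halve the path.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


# Hypothetical return times, in seconds, for three pixels of a scan.
return_times_s = [1.0e-6, 2.0e-6, 4.0e-6]
ranges_m = [range_from_round_trip(t) for t in return_times_s]

for t, r in zip(return_times_s, ranges_m):
    print(f"return after {t * 1e6:.1f} microseconds -> {r:.1f} m")
```

A scanning lidar repeats this calculation once per pulse as the beam sweeps the scene, which is why the map is assembled pixel by pixel.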

Global shutter flash lidar, as the name suggests, acquires the data all at once, using a single laser pulse to generate the entire map, explains Langley Research Center engineer Farzin Amzajerdian. The pulse is received by a focal plane array with thousands of pixels, which offers many advantages—most importantly, speed. “You can have tens of thousands of pixels in one single shot of the laser,” he explains.

But it also significantly reduces the computational load, because all the data is received at the same moment and in the same physical location. In contrast, with traditional lidar, the craft carrying the device is often in motion as it sends out a series of laser pulses. “You have to keep track of that movement very precisely in order to correctly calculate how to put all these pixels together,” says Amzajerdian.
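
A rough calculation shows why motion matters. The sketch below assumes a simplified model in which the only motion that counts is the platform's travel between the first and last pulse of a frame; the pulse rate and platform speed are hypothetical values chosen for illustration, and the 15,000-pixel frame size is the figure the company quotes for its sensor. The function name `travel_during_acquisition` is likewise invented for the example.

```python
import math


def travel_during_acquisition(pixels: int, pixels_per_pulse: int,
                              pulses_per_second: float,
                              speed_m_per_s: float) -> float:
    """Distance the platform moves between the first and last pulse of one frame."""
    pulses_needed = math.ceil(pixels / pixels_per_pulse)
    # A frame built from N pulses spans N - 1 pulse intervals.
    acquisition_s = (pulses_needed - 1) / pulses_per_second
    return speed_m_per_s * acquisition_s


PIXELS = 15_000            # frame size quoted for the flash lidar sensor
PULSE_RATE_HZ = 100_000.0  # assumption: hypothetical laser pulse rate
SPEED_M_PER_S = 30.0       # assumption: hypothetical platform speed

# Scanning lidar: one pixel per pulse, so the frame needs 15,000 pulses.
scanning_m = travel_during_acquisition(PIXELS, 1, PULSE_RATE_HZ, SPEED_M_PER_S)
# Flash lidar: every pixel comes from the same single pulse.
flash_m = travel_during_acquisition(PIXELS, PIXELS, PULSE_RATE_HZ, SPEED_M_PER_S)

print(f"scanning: platform moves {scanning_m:.2f} m while the frame is built")
print(f"flash:    platform moves {flash_m:.2f} m while the frame is built")
```

Under these assumed numbers the scanning platform travels roughly 4.5 meters while a single frame is collected, and software must correct for that displacement pixel by pixel. In the single-pulse case the model gives zero, because there is no second pulse to reconcile with the first.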

Amzajerdian’s team at NASA began exploring the use of flash lidar as early as 2006, while working on the Autonomous Landing and Hazard Avoidance Technology (ALHAT) project, an effort that eventually led to Morpheus’ successful simulated Moon landing in 2014. “The idea there was to use a lidar like a 3D camera, so when we go to the Moon or Mars, the lander can look below and see the rocks and craters and then figure out where would be the best place to sit,” he says.

In previous missions, NASA would analyze images ahead of time to find a smooth and safe landing spot. “Going forward, now the scientists want to go to places that are more interesting. In order to do that, they can’t just pick the safest and most benign areas to go to,” he says. “If we want to go to these interesting places, we have to have an onboard hazard avoidance sensor.”

Technology Transfer

By the time the ALHAT project came around, flash lidar technology was already in development. Crucially, Santa Barbara, California-based Advanced Scientific Concepts Inc. (ASC) had already developed the focal plane array at the heart of the device, says the company’s president and CEO, Brad Short.

But it still needed work, especially for space applications, so over the next several years the Jet Propulsion Laboratory, Langley, and other NASA field centers provided additional funding through the Small Business Innovation Research (SBIR) program and other project funds. “We funded them to build cameras that we took and put in a system, and we tested it on helicopters, airplanes, and even a rocket-powered vehicle,” Amzajerdian says. “And through all this process we were working with them. When we saw problems, we tried to understand them and communicate to the company what we saw, and sometimes we’d also suggest solutions to them.”

Short credits the funding with helping the company mature the technology significantly. “The NASA funding was very instrumental,” he says. “They funded the right things for us to do.” While they may have ended up with a functional camera eventually, he says, the NASA SBIR and other funding accelerated the process by years and also helped them develop a space-ready camera that may not have been possible otherwise.

Benefits

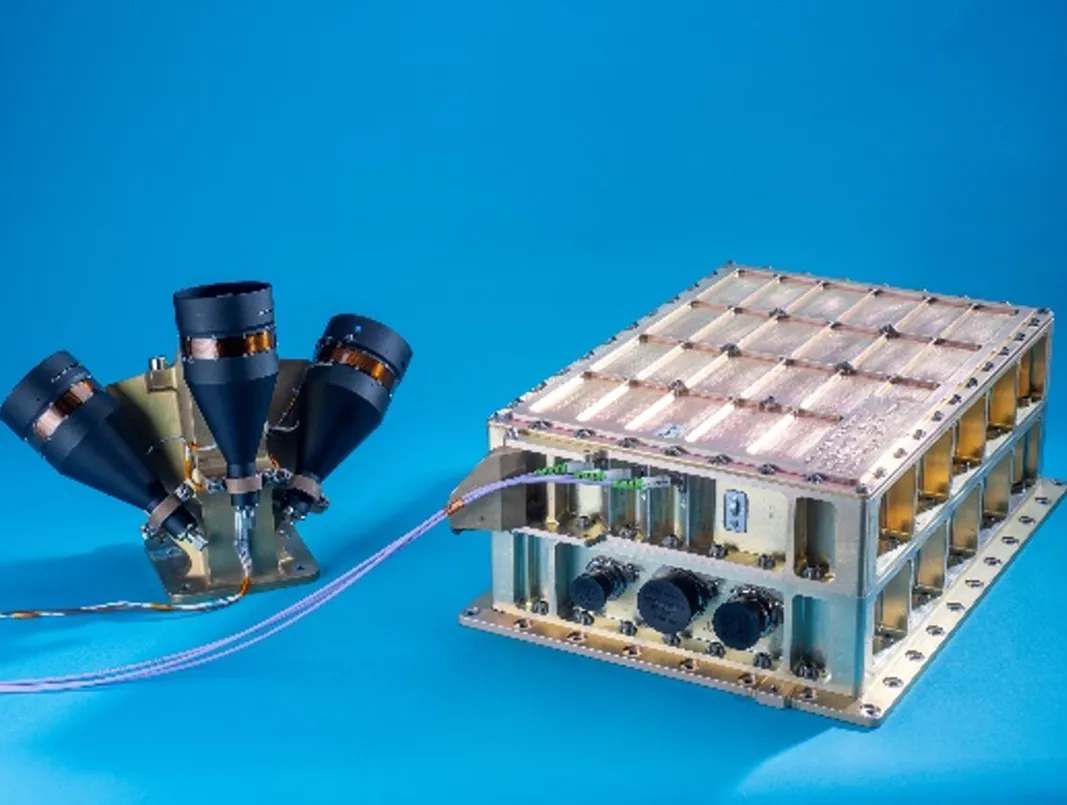

Today, ASC’s flash lidar is more than space-ready; it is a space pioneer. NASA’s Origins, Spectral Interpretation, Resource Identification, Security-Regolith Explorer (OSIRIS-REx) asteroid sample return mission has one of the cameras to help guide the final approach to Bennu, its target asteroid. “Our sensor provides the range to the asteroid and a 3D map of the region they were approaching, so they could position themselves within those last few meters.” In July 2020, OSIRIS-REx is scheduled to navigate in close proximity to Bennu to perform the sample return mission, thanks in part to the flash lidar guidance.

But the imager has plenty of earthbound applications as well, including for driverless cars, and in 2012, ASC began working on optimizing its technology for the road, sparking interest from a German automotive manufacturer, Continental AG.

Continental is best known for its tires, but it also makes a number of other car components for major car makers worldwide. It is also working hard on technology for autonomous driving, Short explains, and is already one of the largest makers of radar devices for cars.

In 2016, Continental bought out ASC, aiming to further develop flash lidar for the autonomous car market. “They looked at the scanning technology that is being used,” Short says, referring to traditional lidar, “and they didn’t think it would be robust enough. They wanted a solid-state, no-moving-parts system.”

ASC’s automotive flash lidar had been adapted from the space-ready devices like the one that flew to Bennu, but “the basic technology is the same,” notes Short. Among other differences, the car version is significantly smaller, four inches by four inches by two inches, about an eighth of the size needed for the space-qualified version.

But the essential benefits are the same. Most importantly, “traditional lidar would have one pixel per pulse. We have 15,000 pixels. And because we take that whole frame of data in one capture, there’s no distortion from motion,” says Short. By reducing the software processing requirements, flash lidar can identify road hazards more quickly—a crucial safety benefit when navigating roads with other drivers, pedestrians, cyclists, and more.

That reduced processing load also has other benefits, including reducing the required power from around 150 watts for a traditional lidar to just 40 for the ASC space-ready system.

Meanwhile, the original team at ASC, interested in smaller but lucrative applications like military and aerospace, founded a new company, ASC LLC, after negotiating an exclusive, royalty-free license agreement from Continental for the original technology.

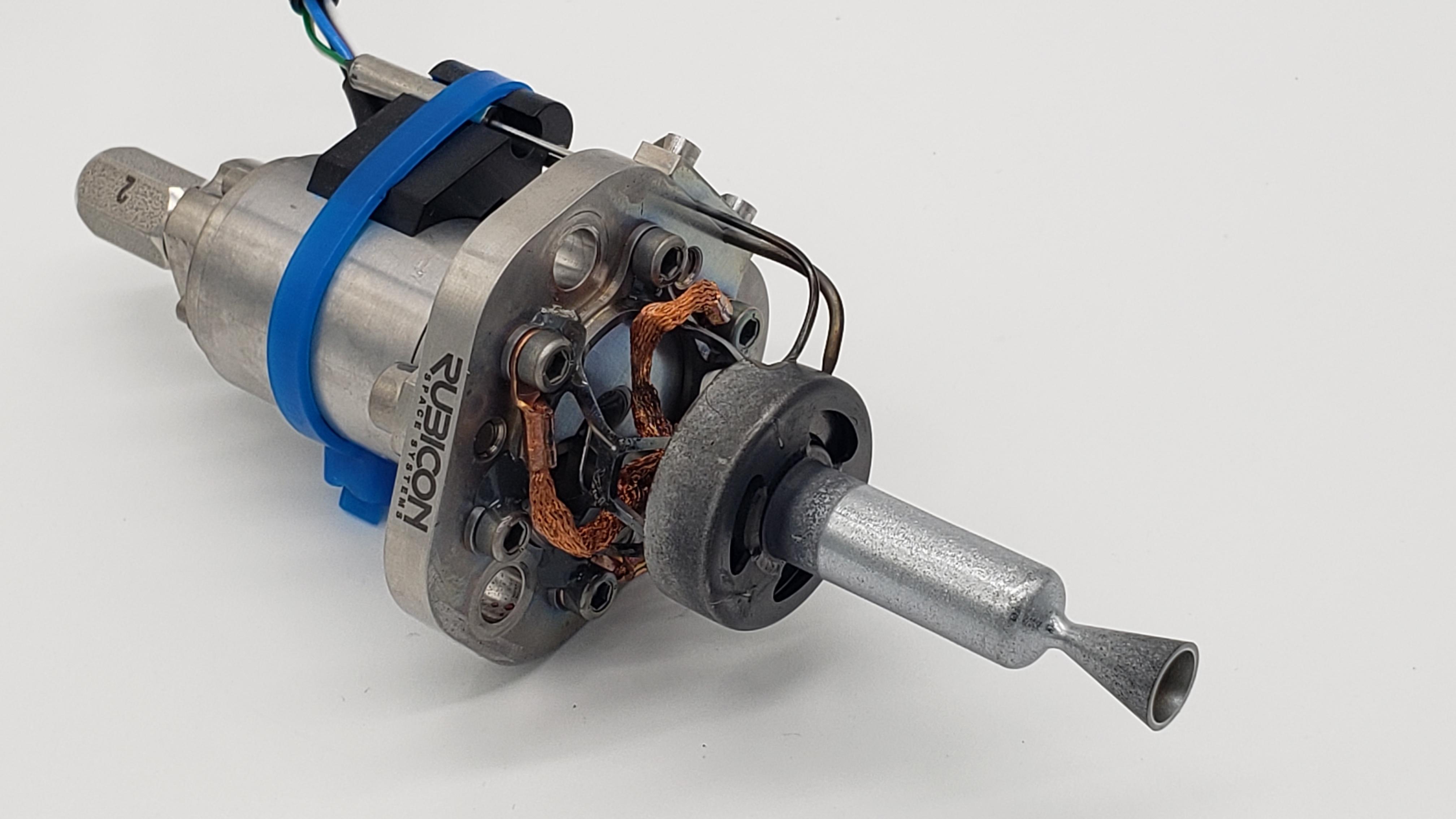

ASC LLC currently sells three systems, including the Peregrine system, its original prototype for driverless cars, as well as the TigerCub, a long-range, full-feature, general purpose flash lidar, with range capabilities of just over half a mile, and the GoldenEye, a space lidar system with a range of just over three miles.

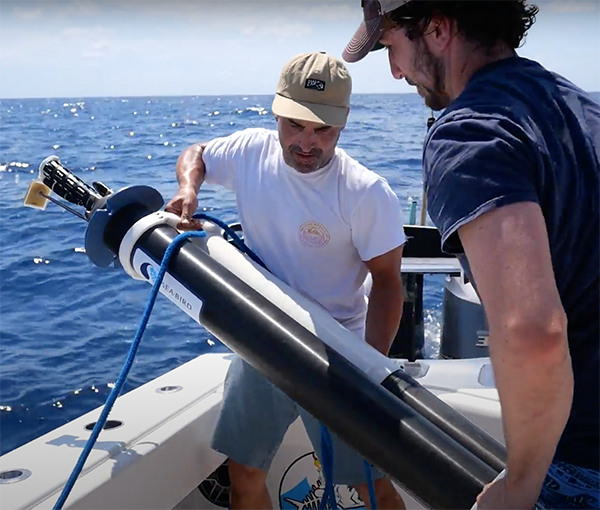

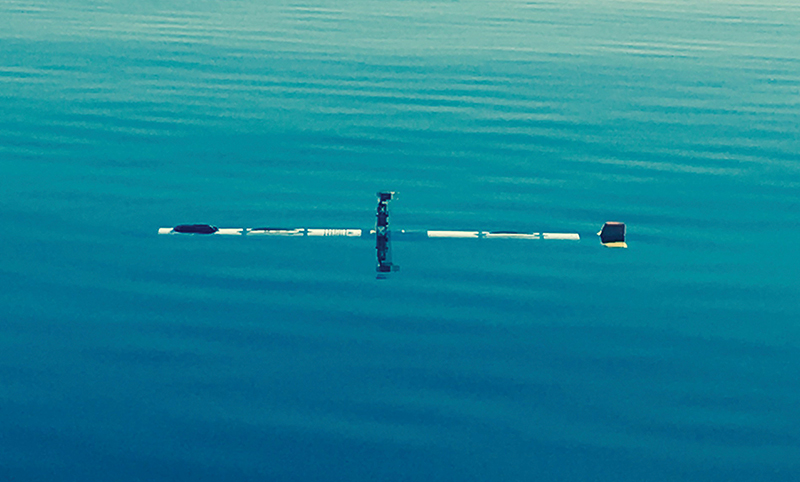

And all the ASC devices are compatible with 532-nanometer lasers, which have a shorter wavelength, and therefore a higher frequency, than the lasers used with conventional lidar systems, explains Michael Dahlin, the company’s director for business development. That enables an additional application, marine-based navigation, because the higher-frequency laser is better able to penetrate water.
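
The link between wavelength and frequency is a one-line formula, frequency = speed of light / wavelength. The sketch below applies it to the 532-nanometer figure. The article does not name the wavelength of conventional systems, so the 1,064-nanometer comparison value is an assumption chosen only because it is one common infrared lidar wavelength; the function name `frequency_thz` is also invented for the example.

```python
# Speed of light in vacuum, metres per second (exact by SI definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def frequency_thz(wavelength_nm: float) -> float:
    """Optical frequency in terahertz for a vacuum wavelength in nanometres."""
    if wavelength_nm <= 0:
        raise ValueError("wavelength must be positive")
    wavelength_m = wavelength_nm * 1e-9
    return SPEED_OF_LIGHT_M_PER_S / wavelength_m / 1e12


GREEN_NM = 532.0       # wavelength given in the article
INFRARED_NM = 1064.0   # assumption: one common infrared lidar wavelength

print(f"{GREEN_NM:.0f} nm  -> {frequency_thz(GREEN_NM):.1f} THz")
print(f"{INFRARED_NM:.0f} nm -> {frequency_thz(INFRARED_NM):.1f} THz")
```

Halving the wavelength doubles the frequency, so under this assumed comparison the green laser runs at about twice the frequency of the infrared one.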

“The ability of our camera to produce distortion-free data, point cloud data, is enabling real-time autonomous navigation, in all kinds of applications: space platforms, airborne, terrestrial platforms, and marine platforms,” Dahlin says.

Morpheus, seen here during a test flight, is a prototype of an autonomous lunar descent vehicle. It is equipped with technology from the Autonomous Landing and Hazard Avoidance Technology project, one of the key components of which was a global shutter flash lidar that can instantly create a 3D topographical map of the surface to help guide the vehicle to a smooth landing site.

This autonomous underwater vehicle is beginning its descent to scan a shipwreck and the sea floor with sonar. 3D images from lidar could provide additional imagery for such expeditions. While most conventional lidars do not work underwater, the ASC devices do, because they use a higher-frequency laser that can better penetrate water. Image courtesy of U.S. Navy

Advanced Scientific Concepts’ (ASC) global shutter flash lidar provides crucial safety benefits when navigating amid cars and other obstacles at high speeds, because its reduced software processing requirements let it identify road hazards more quickly. It gathers 15,000 pixels with every pulse, and because the image is gathered all at once, there is no distortion from motion.