Computer Learning Program Inventories Farmers’ Fields

NASA Technology

There are now countless Earth-imaging satellites circling the globe, with more launched each year than the year before. Yet with all those lenses peering down at the planet, there is still no program to stitch the images together into a complete and regularly updated portrait of Earth.

This was what GeoVisual Analytics, a relatively young company based in Boulder, Colorado, proposed: an automatically updated, global land classification map based on imagery from the Landsat mission, which captures an image of the entire planet every 16 days.

With funding from a 2014 Small Business Innovation Research (SBIR) contract with NASA’s Goddard Space Flight Center, the company set out to fuse satellite imagery with existing geographical datasets, work out land classification techniques, and enable continuous updating.

By coupling the imagery with “ground truth” data from a platform GeoVisual developed under two other SBIR contracts with NASA’s Stennis Space Center, the system would continually improve its accuracy and ability to classify ground cover. The OnSight platform developed at Stennis basically crowdsources information about conditions on the ground, letting users report details and correct inaccuracies. This would also add new layers of information to the product.

At least that was the plan. “We hoped to use this map in a number of ways,” says Dan Duffy, high-performance computing lead at the NASA Center for Climate Simulation at Goddard, who worked with GeoVisual on the project.

“One thing we would use it for would be a much higher-resolution map to put into our climate models,” he says, noting that existing models have a resolution of a kilometer, while Landsat has 30-meter resolution. Climate and weather are tied to land cover, he notes. “These things are important and will get to be more important as the resolution of our weather models gets down to more human scales.”

Observing change over time is also important, and current images of the entire globe are updated only every four or five years, leading to inaccurate pictures and incomplete records of global polar ice melt, urban sprawl, and deforestation, Duffy says.

Continually updated Earth imagery can also be used for crop prediction and precision agriculture, which GeoVisual knew from the start would be its most viable commercial application. Knowing global coverage and health of a given crop would allow prediction of the year’s yield. “If you know it’s going to be low or high, you can make a lot of money,” Duffy points out.

One limitation the work ran up against, though, was that Earth, however tiny on a cosmic scale, is still positively huge to a computer server and the humans who operate it. “Ultimately it’s a fairly big data problem, especially with all the images from Landsat 7, 8, and soon to be 9,” Duffy says. Even NASA and the U.S. Geological Survey’s massive Land Processes Distributed Active Archive Center, which hosts all the Landsat data and NASA’s global Moderate Resolution Imaging Spectroradiometer data, isn’t particularly equipped to mine all that information. For example, Duffy says, “They don’t necessarily have the robust tools to create a global vegetation index.”

And even with datasets pushing the boundaries of manageability, GeoVisual found the resolution wasn’t high enough for the agricultural applications it was hoping to capitalize on.

Technology Transfer

“During their Phase I contract, they focused on using the Landsat data to better their classification scheme,” Duffy says, explaining that this meant developing an algorithm that could analyze imagery pixel by pixel, assigning a vegetation index number and one of 30 or 40 land-cover classifications to each dot.

That was as far as the work got, but it was enough for GeoVisual to develop what it calls its Computer Learning Imagery Platform (CLIP).

“The work for Goddard was basically land classification from satellite imagery—training algorithms to classify land types using multispectral imagery,” says Jeffrey Orrey, GeoVisual’s cofounder and CEO, noting that this could be satellite or aircraft imagery. In the end, the product the company commercialized uses the higher-resolution imagery that can be achieved by aircraft imagers.
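The article does not say which algorithm CLIP uses, so the following is only a minimal sketch of the general idea of training on labeled multispectral pixels and then classifying an image pixel by pixel. It swaps in a simple nearest-centroid classifier; the band order (green, red, near-infrared), the reflectance values, the class names, and all function names are hypothetical choices made for illustration.

```python
from math import dist

def train_centroids(samples):
    """Average the band values of the labeled pixels in each class.

    samples: list of (band_values, label) pairs (hypothetical training data).
    Returns a dict mapping each label to its mean band vector.
    """
    sums, counts = {}, {}
    for bands, label in samples:
        if label not in sums:
            sums[label] = [0.0] * len(bands)
            counts[label] = 0
        sums[label] = [s + b for s, b in zip(sums[label], bands)]
        counts[label] += 1
    return {label: [s / counts[label] for s in sums[label]] for label in sums}

def classify_pixel(bands, centroids):
    """Return the label whose centroid is closest (Euclidean) to this pixel."""
    return min(centroids, key=lambda label: dist(bands, centroids[label]))

def classify_image(image, centroids):
    """Classify every pixel of an image given as rows of band tuples."""
    return [[classify_pixel(px, centroids) for px in row] for row in image]

# Made-up reflectances in (green, red, near-infrared) order.
training = [
    ((0.10, 0.05, 0.50), "crop"),
    ((0.12, 0.07, 0.46), "crop"),
    ((0.20, 0.25, 0.30), "bare soil"),
    ((0.22, 0.27, 0.34), "bare soil"),
    ((0.06, 0.04, 0.02), "water"),
    ((0.08, 0.04, 0.02), "water"),
]
centroids = train_centroids(training)
labels = classify_image([[(0.11, 0.06, 0.48), (0.07, 0.04, 0.03)]], centroids)
```

Because the classifier sees only per-pixel band values, the same code applies whether a pixel covers 30 meters of Landsat imagery or a few centimeters of drone imagery, which is consistent with Orrey's point that the processing method stays the same as pixel size changes.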

“The methodology for processing the imagery is the same, it’s just a question of pixel size,” Orrey says. “The Goddard work really was the basis for what we’re doing now for crop detection using aircraft and drone imagery.”

The company took the technology to the 2015 THRIVE Accelerator in Salinas, California, an event aimed at boosting promising startup companies in the food and agricultural technology industries, and was named as a finalist. As part of the accelerator, the company received mentoring from Taylor Farms, the world’s largest producer of fresh-cut vegetables and now CLIP’s first customer.

Benefits

By fall of 2015, GeoVisual was working on a contract basis for Taylor Farms, using CLIP to analyze drone imagery of fields and take inventory of crops.

Using digital images, the software can determine a crop’s stages of growth and its health, allowing Taylor Farms, which partners with growers throughout the Salinas Valley, to predict yields throughout the season.

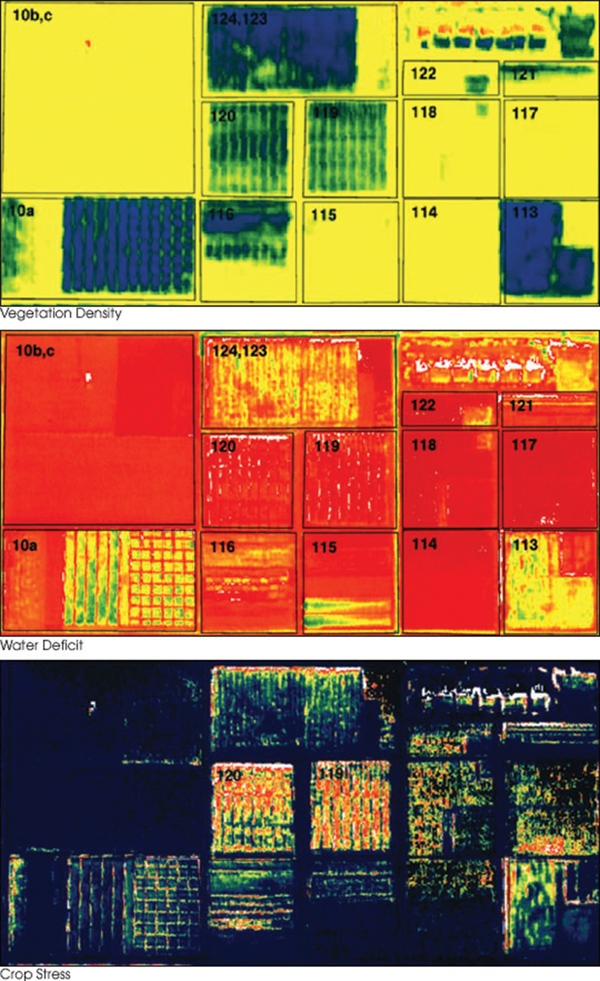

Health can be determined by the amount of infrared energy reflected relative to reflected visible light, Orrey says, explaining that healthy crops reflect more infrared light to keep cool.
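One common way to express that ratio is the normalized difference vegetation index (NDVI), which compares near-infrared and red reflectance for each pixel. The article does not confirm that CLIP uses NDVI specifically, so treat this as an illustrative sketch; the function names and sample values are assumptions.

```python
def ndvi(nir, red):
    """NDVI for one pixel: (NIR - red) / (NIR + red), ranging from -1 to 1.

    Higher values mean more infrared is reflected relative to visible red
    light, which generally indicates denser, healthier vegetation.
    """
    total = nir + red
    if total == 0:
        return 0.0  # no signal in either band; avoid dividing by zero
    return (nir - red) / total

def ndvi_map(nir_band, red_band):
    """Apply ndvi() pixel by pixel to two equally sized 2-D band grids."""
    return [
        [ndvi(n, r) for n, r in zip(nir_row, red_row)]
        for nir_row, red_row in zip(nir_band, red_band)
    ]

# Hypothetical reflectances: a leafy pixel next to a bare-soil-like pixel.
index = ndvi_map([[0.50, 0.20]], [[0.10, 0.20]])
```

In this made-up example the first pixel scores well above the second, the kind of contrast that could flag a stressed patch within a field.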

He says parts of the company’s OnSight platform are also integral to the work and could become more so, for incorporating observations from the ground as well as managing data. “We anticipate that aspects of OnSight would be relevant for data management of this CLIP system.”

2015 was a big year for GeoVisual, which also was the judges’ top pick at the Western Growers Association’s Shark Tank-style Innovation Arena Workshop that November and took second place in the “pitchfest” at the RoboUniverse Conference and Expo the following month.

Moving forward, the company plans to expand its technology’s capabilities and applications, as well as the territory it covers. Further combining CLIP and OnSight could lead to forest monitoring, for example. Conservation International is already using OnSight to monitor fires in the rainforests of Peru and Madagascar (see page 122). “Agriculture and forestry are not unrelated,” Orrey says. “Food security, forestry, and forest health are all very connected.”

For now, though, he says, “We’re very focused on agriculture, and our goal is to become the leading computer vision platform for agriculture.” Eventually, the company wants to move toward the global crop mapping that was part of the initial goal of its Goddard work and would enable prediction of the world’s staple crops.

“As we grow, we anticipate using these technologies on a global scale,” Orrey says. “Especially when we go international, which we plan to do.”

A wealth of information can be gathered about vegetation using hyperspectral imaging, such as vegetation density, top; water deficit, middle; and crop stress, bottom. Using algorithms and techniques it developed with funding from Goddard Space Flight Center, GeoVisual Analytics uses images of farmers’ fields to let them learn more about their crops, including predicted yields.

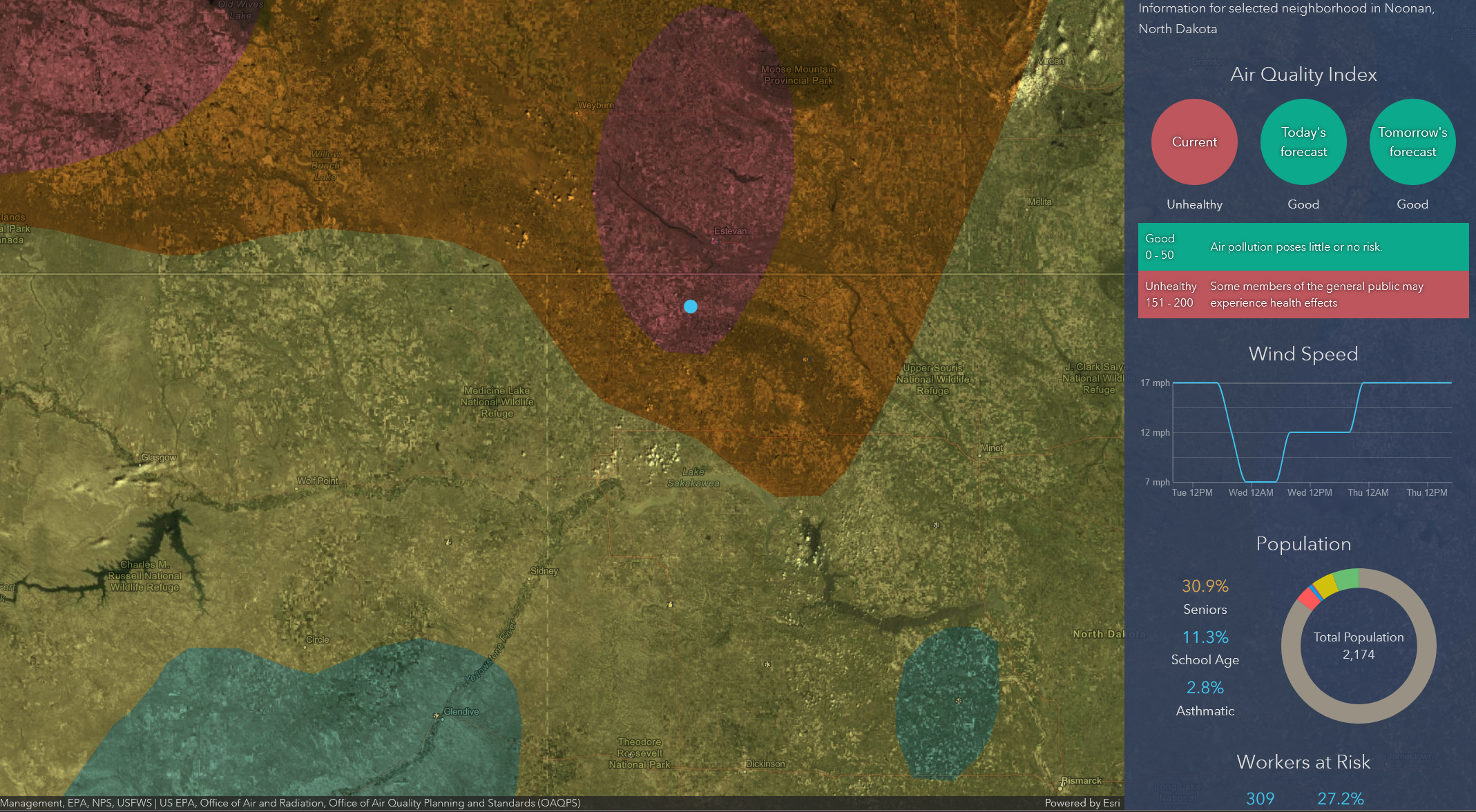

The Computer Learning Imagery Platform (CLIP) that GeoVisual Analytics developed with NASA funding was designed to map global land cover classifications using satellite imagery. To chart farmers’ fields, the company uses CLIP to analyze higher-resolution drone imagery.